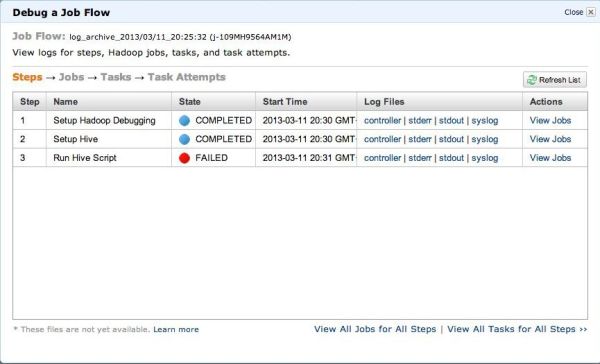

EMRを使用していてステップ途中でエラーが発生した場合、デバッグ用のログ出力先を設定すると実行ログを

AWSコンソール上で確認することができます。

例として、S3のログバケットをまとめるHiveScriptを動かした時のエラーを見てみます。

上記の図にあるようにエラーが発生しています。

LogURIを指定していると、このようにコンソールからLogFileとして確認することができます。

エラーが起こった3番目のステップのそれぞれのリンクを見てみると以下のようになっています。

・controller

2013-03-11T11:31:41.005Z INFO Fetching jar file.

2013-03-11T11:31:48.146Z INFO Working dir /mnt/var/lib/hadoop/steps/3

2013-03-11T11:31:48.146Z INFO Executing /usr/lib/jvm/java-6-sun/bin/java -cp /home/hadoop/conf:/usr/lib/jvm/java-6-sun/lib/tools.jar:/home/hadoop:/home/hadoop/hadoop-core.jar:/home/hadoop/hadoop-tools.jar:/home/hadoop/hadoop-core-0.20.205.jar:/home/hadoop/hadoop-tools-0.20.205.jar:/home/hadoop/lib/*:/home/hadoop/lib/jetty-ext/* -Xmx1000m -Dhadoop.log.dir=/mnt/var/log/hadoop/steps/3 -Dhadoop.log.file=syslog -Dhadoop.home.dir=/home/hadoop -Dhadoop.id.str=hadoop -Dhadoop.root.logger=INFO,DRFA -Djava.io.tmpdir=/mnt/var/lib/hadoop/steps/3/tmp -Djava.library.path=/home/hadoop/native/Linux-i386-32 org.apache.hadoop.util.RunJar /mnt/var/lib/hadoop/steps/3/script-runner.jar s3://ap-northeast-1.elasticmapreduce/libs/hive/hive-script --run-hive-script --base-path s3://ap-northeast-1.elasticmapreduce/libs/hive/ --hive-versions latest --args -f s3n://memorycraft-archive/script/s3.hql -d INPUT_BUCKET_LOCATION=s3://memorycraft-log/logs/ -d OUTPUT_BUCKET_LOCATION=s3://memorycraft-archive/rslt/ -d YYYY=2013 -d MM=03 -d DD=04

2013-03-11T11:34:20.383Z INFO Execution ended with ret val 255

2013-03-11T11:34:20.384Z WARN Step failed with bad retval

2013-03-11T11:34:26.454Z INFO Step created jobs: job_201303111128_0001

・stderr

Logging initialized using configuration in file:/home/hadoop/.versions/hive-0.8.1/conf/hive-log4j.properties

Hive history file=/mnt/var/lib/hive_081/tmp/history/hive_job_log_hadoop_201303111132_1431275973.txt

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/lib/slf4j-log4j12-1.6.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/.versions/hive-0.8.1/lib/slf4j-log4j12-1.6.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

OK

Time taken: 13.475 seconds

OK

Time taken: 0.765 seconds

Total MapReduce jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=

In order to set a constant number of reducers:

set mapred.reduce.tasks=

Starting Job = job_201303111128_0001, Tracking URL = http://ip-10-121-17-157.ap-northeast-1.compute.internal:9100/jobdetails.jsp?jobid=job_201303111128_0001

Kill Command = /home/hadoop/bin/hadoop job -Dmapred.job.tracker=10.121.17.157:9001 -kill job_201303111128_0001

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2013-03-11 11:33:11,849 Stage-1 map = 0%, reduce = 0%

2013-03-11 11:34:12,380 Stage-1 map = 0%, reduce = 0%

2013-03-11 11:34:19,431 Stage-1 map = 100%, reduce = 100%

Ended Job = job_201303111128_0001 with errors

Error during job, obtaining debugging information...

Examining task ID: task_201303111128_0001_m_000002 (and more) from job job_201303111128_0001

Exception in thread "Thread-47" java.lang.RuntimeException: Error while reading from task log url

at org.apache.hadoop.hive.ql.exec.errors.TaskLogProcessor.getErrors(TaskLogProcessor.java:130)

at org.apache.hadoop.hive.ql.exec.JobDebugger.showJobFailDebugInfo(JobDebugger.java:211)

at org.apache.hadoop.hive.ql.exec.JobDebugger.run(JobDebugger.java:81)

at java.lang.Thread.run(Thread.java:662)

Caused by: java.io.IOException: Server returned HTTP response code: 400 for URL: http://10.122.24.232:9103/tasklog?taskid=attempt_201303111128_0001_m_000000_1&start=-8193

at sun.net.www.protocol.http.HttpURLConnection.getInputStream(HttpURLConnection.java:1436)

at java.net.URL.openStream(URL.java:1010)

at org.apache.hadoop.hive.ql.exec.errors.TaskLogProcessor.getErrors(TaskLogProcessor.java:120)

... 3 more

Counters:

FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.MapRedTask

MapReduce Jobs Launched:

Job 0: Map: 1 Reduce: 1 HDFS Read: 0 HDFS Write: 0 FAIL

Total MapReduce CPU Time Spent: 0 msec

Command exiting with ret '255'

・stdout

Downloading 's3://ap-northeast-1.elasticmapreduce/libs/hive/hive-script' to '/mnt/var/lib/hadoop/steps/3/.'

2013-03-11 11:31:52 GMT - INFO Running: /home/hadoop/.versions/hive-0.8.1/bin/hive '-f' 's3n://memorycraft-archive/script/s3.hql' '-d' 'INPUT_BUCKET_LOCATION=s3://memorycraft-log/logs/' '-d' 'OUTPUT_BUCKET_LOCATION=s3://memorycraft-archive/rslt/' '-d' 'YYYY=2013' '-d' 'MM=03' '-d' 'DD=04'

2013-03-11 11:34:20 GMT - ERROR Error executing cmd: /home/hadoop/.versions/hive-0.8.1/bin/hive '-f' 's3n://memorycraft-archive/script/s3.hql' '-d' 'INPUT_BUCKET_LOCATION=s3://memorycraft-log/logs/' '-d' 'OUTPUT_BUCKET_LOCATION=s3://memorycraft-archive/rslt/' '-d' 'YYYY=2013' '-d' 'MM=03' '-d' 'DD=04'

・syslog

2013-03-11 11:31:52,225 INFO org.apache.hadoop.fs.s3native.NativeS3FileSystem (main): Opening '/libs/hive/hive-script' for reading

詳細に出力されているのはstderrのようですが、これだけではよくわかりません。

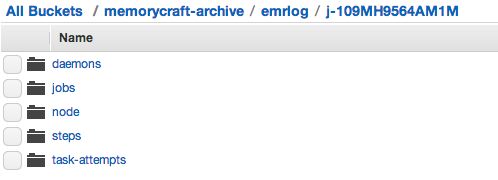

というのもAWSコンソール上で確認できるEMRログはこれで全てではなく、詳細なタスクのログが

EMRログバケットに出力されています。

EMRログバケットを見てみます。

ジョブフローIDごとにフォルダがあり、その中のstepsというフォルダが、AWSコンソールで表示されるログが

入っています。

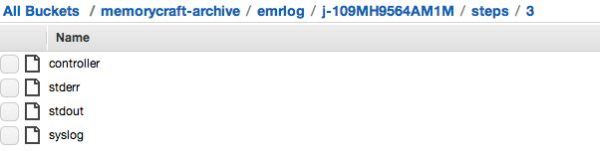

steps/3 以下に先ほどのログファイルが存在しています。

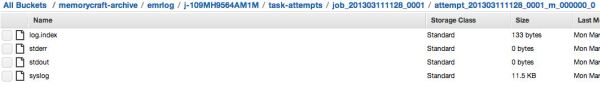

更に詳しい実行タスクのログは、task-attemptsというフォルダに詳細なログが出力されています。

このtask-attempts以下の各サブフォルダにあるsyslogというファイルに詳細なログが出力されています。

・task-attempts/job_201303111128_0001/attempt_201303111128_0001_m_000000_0/syslog

2013-03-11 11:33:13,112 INFO org.apache.hadoop.util.NativeCodeLoader (main): Loaded the native-hadoop library

2013-03-11 11:33:13,268 INFO org.apache.hadoop.mapred.TaskRunner (main): Creating symlink: /mnt/var/lib/hadoop/mapred/taskTracker/distcache/-5343292120509370400_-2048022790_1496912286/10.121.17.157/mnt/var/lib/hive_081/tmp/scratch/hive_2013-03-11_11-32-20_837_75945303502865249/-mr-10001/3d096d97-37e9-47c7-a184-8152010d592a 2013-03-11 11:33:13,354 INFO org.apache.hadoop.filecache.TrackerDistributedCacheManager (main): Creating symlink: /mnt/var/lib/hadoop/mapred/taskTracker/hadoop/jobcache/job_201303111128_0001/jars/job.jar 2013-03-11 11:33:13,362 INFO org.apache.hadoop.filecache.TrackerDistributedCacheManager (main): Creating symlink: /mnt/var/lib/hadoop/mapred/taskTracker/hadoop/jobcache/job_201303111128_0001/jars/.job.jar.crc 2013-03-11 11:33:13,370 INFO org.apache.hadoop.filecache.TrackerDistributedCacheManager (main): Creating symlink: /mnt/var/lib/hadoop/mapred/taskTracker/hadoop/jobcache/job_201303111128_0001/jars/META-INF 2013-03-11 11:33:13,378 INFO org.apache.hadoop.filecache.TrackerDistributedCacheManager (main): Creating symlink: /mnt/var/lib/hadoop/mapred/taskTracker/hadoop/jobcache/job_201303111128_0001/jars/org 2013-03-11 11:33:13,386 INFO org.apache.hadoop.filecache.TrackerDistributedCacheManager (main): Creating symlink: /mnt/var/lib/hadoop/mapred/taskTracker/hadoop/jobcache/job_201303111128_0001/jars/hive-exec-log4j.properties 2013-03-11 11:33:13,430 INFO org.apache.hadoop.filecache.TrackerDistributedCacheManager (main): Creating symlink: /mnt/var/lib/hadoop/mapred/taskTracker/hadoop/jobcache/job_201303111128_0001/jars/javaewah 2013-03-11 11:33:13,838 WARN org.apache.hadoop.metrics2.impl.MetricsSystemImpl (main): Source name ugi already exists!

2013-03-11 11:33:14,037 INFO org.apache.hadoop.mapred.MapTask (main): Host name: ip-10-122-12-88.ap-northeast-1.compute.internal

2013-03-11 11:33:14,088 INFO org.apache.hadoop.util.ProcessTree (main): setsid exited with exit code 0

2013-03-11 11:33:14,106 INFO org.apache.hadoop.mapred.Task (main): Using ResourceCalculatorPlugin : org.apache.hadoop.util.LinuxResourceCalculatorPlugin@1dfd868

2013-03-11 11:33:14,342 INFO com.hadoop.compression.lzo.GPLNativeCodeLoader (main): Loaded native gpl library

2013-03-11 11:33:14,358 WARN com.hadoop.compression.lzo.LzoCodec (main): Could not find build properties file with revision hash

2013-03-11 11:33:14,358 INFO com.hadoop.compression.lzo.LzoCodec (main): Successfully loaded & initialized native-lzo library [hadoop-lzo rev UNKNOWN]

2013-03-11 11:33:14,367 WARN org.apache.hadoop.io.compress.snappy.LoadSnappy (main): Snappy native library is available

2013-03-11 11:33:14,367 INFO org.apache.hadoop.io.compress.snappy.LoadSnappy (main): Snappy native library loaded

2013-03-11 11:33:14,452 WARN org.apache.hadoop.hive.conf.HiveConf (main): hive-site.xml not found on CLASSPATH

2013-03-11 11:33:16,876 INFO org.apache.hadoop.fs.s3native.NativeS3FileSystem (main): Opening 's3://memorycraft-log/logs/2013-03-04-09-16-51-45565F6169B3E2F2' for reading

2013-03-11 11:33:17,238 INFO org.apache.hadoop.io.retry.RetryInvocationHandler (main): Exception while invoking retrievePair of class org.apache.hadoop.fs.s3native.Jets3tNativeFileSystemStore. Not retrying.Status Code: 403, AWS Service: Amazon S3, AWS Request ID: 2981A88871482E0B, AWS Error Code: InvalidObjectState, AWS Error Message: The operation is not valid for the object's storage class, S3 Extended Request ID: 7yHCAsfLHZKbf+5ojWMS9q2B41bBwizoqW4zldSdfEpLoLrKvI9X1WI0brHGsC49

at com.amazonaws.http.AmazonHttpClient.handleErrorResponse(AmazonHttpClient.java:548)

at com.amazonaws.http.AmazonHttpClient.executeHelper(AmazonHttpClient.java:288)

at com.amazonaws.http.AmazonHttpClient.execute(AmazonHttpClient.java:170)

at com.amazonaws.services.s3.AmazonS3Client.invoke(AmazonS3Client.java:2619)

at com.amazonaws.services.s3.AmazonS3Client.getObject(AmazonS3Client.java:809)

at org.apache.hadoop.fs.s3native.Jets3tNativeFileSystemStore.retrievePair(Jets3tNativeFileSystemStore.java:266)

at org.apache.hadoop.fs.s3native.Jets3tNativeFileSystemStore.retrievePair(Jets3tNativeFileSystemStore.java:252)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:39)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)

at java.lang.reflect.Method.invoke(Method.java:597)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:82)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:59)

at org.apache.hadoop.fs.s3native.$Proxy7.retrievePair(Unknown Source)

at org.apache.hadoop.fs.s3native.NativeS3FileSystem.open(NativeS3FileSystem.java:1002)

at org.apache.hadoop.fs.FileSystem.open(FileSystem.java:420)

at org.apache.hadoop.mapred.LineRecordReader.(LineRecordReader.java:78)

at org.apache.hadoop.mapred.TextInputFormat.getRecordReader(TextInputFormat.java:51)

at org.apache.hadoop.hive.ql.io.CombineHiveRecordReader.(CombineHiveRecordReader.java:65)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:39)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:27)

at java.lang.reflect.Constructor.newInstance(Constructor.java:513)

at org.apache.hadoop.hive.shims.HadoopShimsSecure$CombineFileRecordReader.initNextRecordReader(HadoopShimsSecure.java:331)

at org.apache.hadoop.hive.shims.HadoopShimsSecure$CombineFileRecordReader.(HadoopShimsSecure.java:292)

at org.apache.hadoop.hive.shims.HadoopShimsSecure$CombineFileInputFormatShim.getRecordReader(HadoopShimsSecure.java:406)

at org.apache.hadoop.hive.ql.io.CombineHiveInputFormat.getRecordReader(CombineHiveInputFormat.java:549)

at org.apache.hadoop.mapred.MapTask$TrackedRecordReader.(MapTask.java:199)

at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:423)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:377)

at org.apache.hadoop.mapred.Child$4.run(Child.java:255)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:396)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1059)

at org.apache.hadoop.mapred.Child.main(Child.java:249)

2013-03-11 11:33:17,315 INFO org.apache.hadoop.mapred.TaskLogsTruncater (main): Initializing logs' truncater with mapRetainSize=-1 and reduceRetainSize=-1

2013-03-11 11:33:17,452 INFO org.apache.hadoop.io.nativeio.NativeIO (main): Initialized cache for UID to User mapping with a cache timeout of 14400 seconds.

2013-03-11 11:33:17,453 INFO org.apache.hadoop.io.nativeio.NativeIO (main): Got UserName hadoop for UID 106 from the native implementation

2013-03-11 11:33:17,456 WARN org.apache.hadoop.mapred.Child (main): Error running child

java.io.IOException: java.lang.reflect.InvocationTargetException

at org.apache.hadoop.hive.io.HiveIOExceptionHandlerChain.handleRecordReaderCreationException(HiveIOExceptionHandlerChain.java:97)

at org.apache.hadoop.hive.io.HiveIOExceptionHandlerUtil.handleRecordReaderCreationException(HiveIOExceptionHandlerUtil.java:57)

at org.apache.hadoop.hive.shims.HadoopShimsSecure$CombineFileRecordReader.initNextRecordReader(HadoopShimsSecure.java:345)

at org.apache.hadoop.hive.shims.HadoopShimsSecure$CombineFileRecordReader.(HadoopShimsSecure.java:292)

at org.apache.hadoop.hive.shims.HadoopShimsSecure$CombineFileInputFormatShim.getRecordReader(HadoopShimsSecure.java:406)

at org.apache.hadoop.hive.ql.io.CombineHiveInputFormat.getRecordReader(CombineHiveInputFormat.java:549)

at org.apache.hadoop.mapred.MapTask$TrackedRecordReader.(MapTask.java:199)

at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:423)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:377)

at org.apache.hadoop.mapred.Child$4.run(Child.java:255)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:396)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1059)

at org.apache.hadoop.mapred.Child.main(Child.java:249)

Caused by: java.lang.reflect.InvocationTargetException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:39)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:27)

at java.lang.reflect.Constructor.newInstance(Constructor.java:513)

at org.apache.hadoop.hive.shims.HadoopShimsSecure$CombineFileRecordReader.initNextRecordReader(HadoopShimsSecure.java:331)

... 11 more

Caused by: Status Code: 403, AWS Service: Amazon S3, AWS Request ID: 2981A88871482E0B, AWS Error Code: InvalidObjectState, AWS Error Message: The operation is not valid for the object's storage class, S3 Extended Request ID: 7yHCAsfLHZKbf+5ojWMS9q2B41bBwizoqW4zldSdfEpLoLrKvI9X1WI0brHGsC49

at com.amazonaws.http.AmazonHttpClient.handleErrorResponse(AmazonHttpClient.java:548)

at com.amazonaws.http.AmazonHttpClient.executeHelper(AmazonHttpClient.java:288)

at com.amazonaws.http.AmazonHttpClient.execute(AmazonHttpClient.java:170)

at com.amazonaws.services.s3.AmazonS3Client.invoke(AmazonS3Client.java:2619)

at com.amazonaws.services.s3.AmazonS3Client.getObject(AmazonS3Client.java:809)

at org.apache.hadoop.fs.s3native.Jets3tNativeFileSystemStore.retrievePair(Jets3tNativeFileSystemStore.java:266)

at org.apache.hadoop.fs.s3native.Jets3tNativeFileSystemStore.retrievePair(Jets3tNativeFileSystemStore.java:252)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:39)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)

at java.lang.reflect.Method.invoke(Method.java:597)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:82)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:59)

at org.apache.hadoop.fs.s3native.$Proxy7.retrievePair(Unknown Source)

at org.apache.hadoop.fs.s3native.NativeS3FileSystem.open(NativeS3FileSystem.java:1002)

at org.apache.hadoop.fs.FileSystem.open(FileSystem.java:420)

at org.apache.hadoop.mapred.LineRecordReader.(LineRecordReader.java:78)

at org.apache.hadoop.mapred.TextInputFormat.getRecordReader(TextInputFormat.java:51)

at org.apache.hadoop.hive.ql.io.CombineHiveRecordReader.(CombineHiveRecordReader.java:65)

... 16 more

2013-03-11 11:33:17,500 INFO org.apache.hadoop.mapred.Task (main): Runnning cleanup for the task

内容を確認すると、Error Message: The operation is not valid for the object’s storage class,

S3 Extended Request IDとなっており、ストレージクラスが良くないと言われています。

EMRの入力ログバケットを見ると、Glaceirになっているクラスがありました。

EMRはGlaceirファイルは処理できないため、エラーになっていたようです。

EMRで原因の分からないエラーが発生した場合、これからはEMRログのS3バケットを見るようにしようと思います。

こちらの記事はなかの人(memorycraft)監修のもと掲載しています。

元記事は、こちら